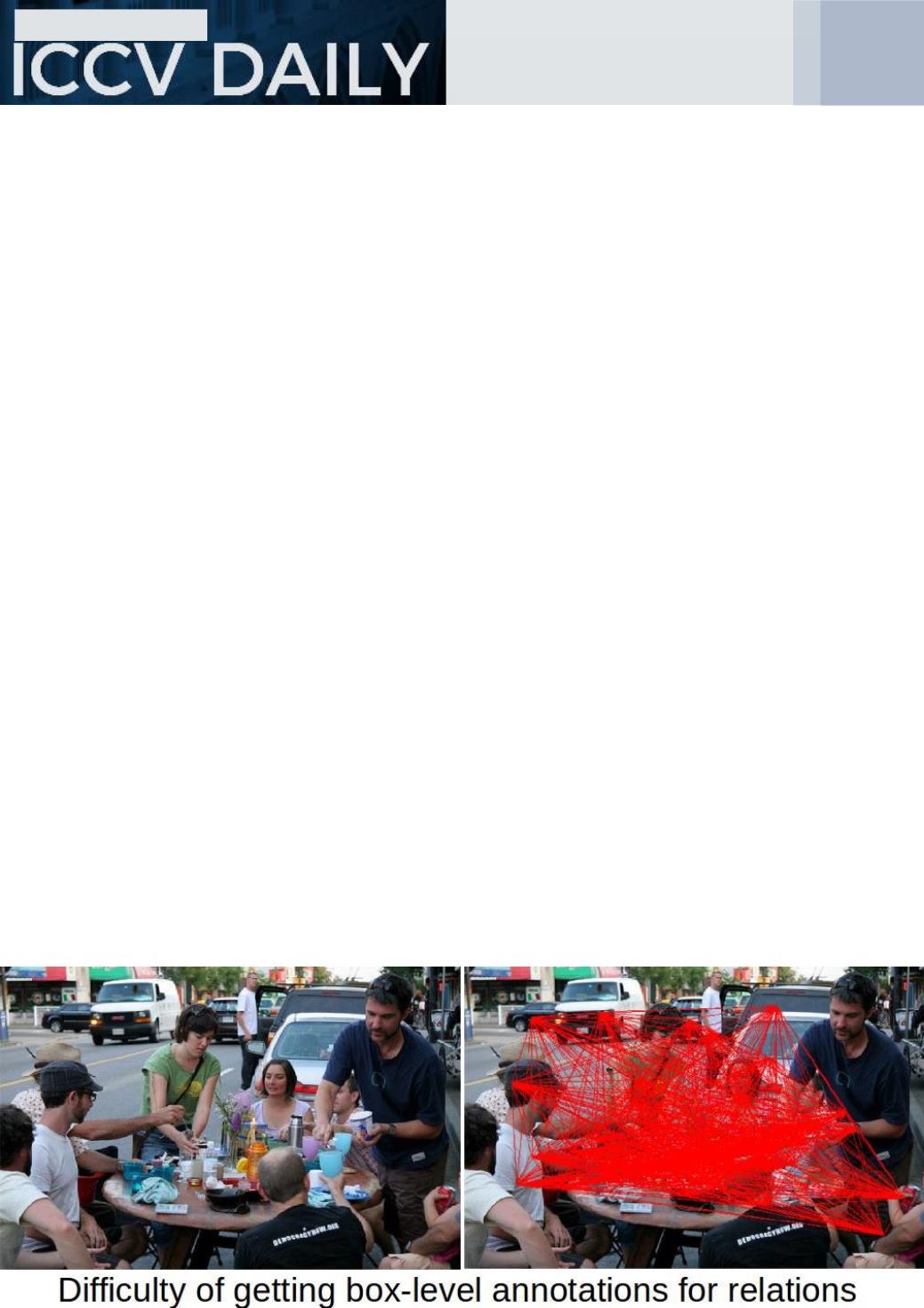

to learn with full supervision these

kinds of relations, you would have to

annotate all the relations between the

objects in an image. Getting this kind of

annotation is very expensive, because

the total number of annotations you

would have to get for one image is n2,

where n is the number of objects in

your image.”

The main benefit of this is that they just

require annotation at image level, so

people don’t have to draw the boxes

between all objects and annotate the

relations for all of those pairs of

objects.

Julia tells us their method is in two

stages. The first stage is to get

candidate objects for images. For this,

they use a standard object detector.

Then they have these candidate objects

as proposals and want to learn the

relations between them. For this, they

use a method called discriminative

clustering, which is a very simple

framework developed by

Francis Bach

and

Zaïd Harchaoui

. It is a very flexible

method which allows them to

incorporate constraints very simply.

Julia says the next step is to move

towards using more natural language.

Right now, they require image-level

triplets.

They are constraining

annotation to be in the form of triplets

inside a limited vocabulary – a fixed

vocabulary for object and a fixed

vocabulary for relation – so the next

step is to learn directly from captions.

On the internet, if you want to use web

data, you will encounter natural

language, not triplets.

Julia concludes by saying: “

I would like

to advertise a new dataset that we

introduced which is called UnRel for

unusual relations. This dataset also

answers the difficulty to get

annotations at box-level, but this time

at test time, because you encounter a

lot of missing annotation for evaluation,

that would introduce noise at

evaluation. To solve this problem of

missing annotations at test time, we

introduce a dataset of unusual

relations. For example, a dog riding a

bike or a car in trees. The advantage of

using this dataset for evaluation is that

you will have a reduced level of noise in

evaluation. You can now evaluate with

retrieval, without worrying about the

problem of missing annotation

.”

If you want to learn more about

Julia’s work, come today (Friday)

to her oral at 13:30 and her poster

at 15:00.

9

Friday

Julia Peyre