Localizing when and where the

actions take place in a video. For

example, you have somebody that is

diving in this amazing swimming pool

and you want to find out where

exactly this human is, spatially on the

frame-level, and when his action

starts and ends temporally. Until

today, state of the art works focused

on tackling the problem more at a

frame-level. They use per frame

detectors that detect the actions at a

frame level, then they link the actions

over time to create spatio-temporal

tubes. There is a lot of good in this

method and there have been

improvements over the years, but it

has some basic disadvantages. It

doesn’t exploit the temporal

continuity of

videos.

Imagine

somebody in this position. Am I sitting

down or standing up?

I think you are standing up with your

knees bent.

I am sitting down right now.

You are. What’s the story?

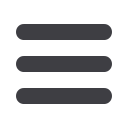

We propose to surpass this limitation

and instead of working on a frame

level, we work on a sequence of

frames. We propose an action-tubelet

detector that takes a simple sequence

of frames and outputs tubelets. In the

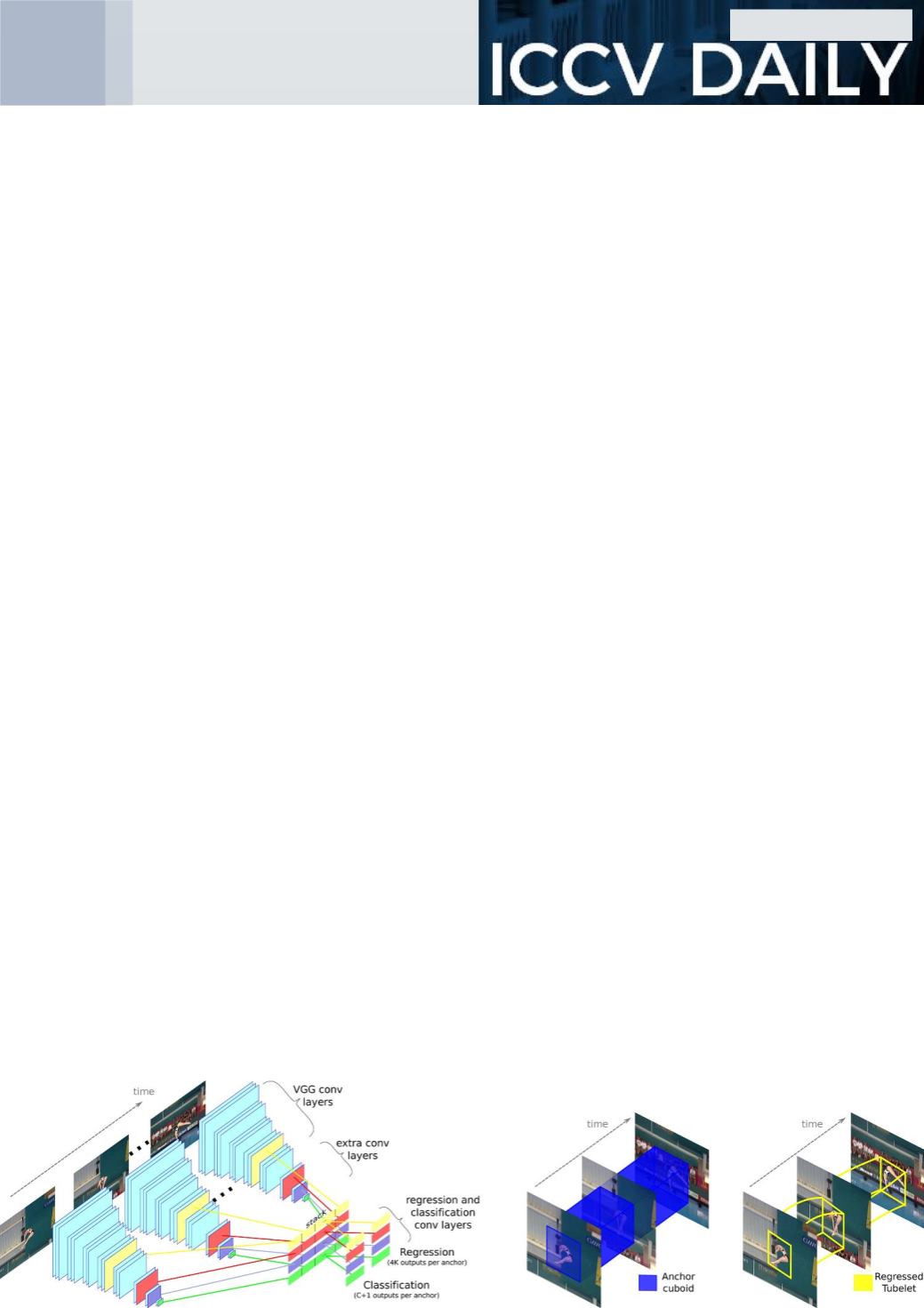

way that standard object detectors

work, we extend the anchor boxes of

standard detectors to anchor cuboids

that have a fixed spatial extent over

time. We regress the anchor cuboids

in order to follow the movement of

the actor. We try to say, where the

actor is, it will be like this, going up

and down. This is the regression part,

and the classification – which basically

means to put a label on what

somebody is doing – like running,

kicking a ball, or any action label. To

do so, given sequences of K frames,

we deploy K parallel streams, one for

each of the input frames. We learn

our action tubelet detector jointly for

all parallel streams, where the weights

are shared amongst all the streams. In

the end, we concatenate the features

coming from each stream, then we

classify and regress the anchor cuboid

to produce the tubelet, which is what

we want.

What is interesting there is that the

features are learned jointly; however,

the regression is done per frame from

features that are learned jointly over

the whole sequence.

The

classification, putting the action label

into the sequence, is consistent over

the whole tubelet. That enforces

consistency of the actions over time.

What feature would you add to the

model that it doesn’t have today?

I could tell you millions of them! What

matters though is what I think people

who work on action localization or

18

Friday

Vicky Kalogeiton