Industrial robots that assemble modules or perform pick-and-place tasks may need to identify parts by the text printed on them. Autonomously navigating robots may rely on text in road signs or signboard landmarks. In all these cases, the text to be read poses challenges for the OCR system.

Best-case scenario of OCR for Robots

The easiest cases are those in which the robot’s camera is mounted on a multi-joint arm with many degrees of freedom. Ideally, the camera is positioned so that the text to be recognized appears straight and fills the whole frame, which captures as much detail as possible. In this case, the machine vision system guides the robot and camera into the desired position and orientation. First, if the text location is not already specified (in a high-definition map), the potential position of the text in the frame must be located. Today, a deep learning classifier can propose a potential text location, and can even perform the recognition task itself. The output of this classifier can then be used to guide the robot to place the camera in the optimal position for reading the text.
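As a rough illustration of how a detected text location could drive the camera, the sketch below turns a bounding box into a re-centering offset and a zoom factor. The `Box` type, the `framing_correction` function and the `fill` margin are hypothetical names chosen for this example; the article does not describe the actual control scheme.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned text bounding box in pixels (hypothetical detector output)."""
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height


def framing_correction(box, frame_w, frame_h, fill=0.9):
    """Return (dx, dy, zoom) that would centre the text and make it fill the frame.

    dx, dy: offset of the text centre from the frame centre, as a fraction of
            the frame size (positive means the text is right of / below centre).
    zoom:   magnification at which the box spans `fill` of the frame along
            its tighter dimension.
    """
    cx = box.x + box.w / 2
    cy = box.y + box.h / 2
    dx = (cx - frame_w / 2) / frame_w
    dy = (cy - frame_h / 2) / frame_h
    zoom = fill * min(frame_w / box.w, frame_h / box.h)
    return dx, dy, zoom


# Assumed example: a 160x40 px text box detected in a 640x480 frame.
dx, dy, zoom = framing_correction(Box(x=400, y=300, w=160, h=40), 640, 480)
print(f"shift right {dx:.2f}, shift down {dy:.2f}, zoom x{zoom:.1f}")
```

A real system would translate these values into arm motions through its own kinematics, and would typically repeat the detect-and-move loop until the text is well framed.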

Less optimal conditions of OCR for Robots

When the camera is fixed-mounted on the robot assembly, we first need to locate the potential text in the image, as before. Here, however, we are limited to the existing camera location, which makes text recognition more challenging: the text will be extracted from the image at lower resolution, and it may suffer from perspective and other deformations.
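One common way to undo perspective deformation, assuming the four corners of the text region have already been found, is to estimate a homography that maps them onto an upright rectangle. The sketch below is only an illustration with made-up corner coordinates and hypothetical helper names; it is not taken from the article, and in practice an image processing library would apply the transform to every pixel.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [v - f * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography(src, dst):
    """Return the 3x3 matrix H (with H[2][2] = 1) mapping each src corner to its dst corner."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear equations per point pair, from u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1)
        # and the matching expression for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def warp_point(H, x, y):
    """Apply the homography H to a single point."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


# Assumed corners of a skewed label in the image, and the upright 400x100 target.
src = [(120, 80), (500, 110), (520, 300), (100, 260)]
dst = [(0, 0), (400, 0), (400, 100), (0, 100)]
H = homography(src, dst)
print([tuple(round(c, 3) for c in warp_point(H, x, y)) for x, y in src])
```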

Having the text inside the frame is still not enough to ensure adequate OCR performance. The text is not necessarily a well-defined black font printed on a white background. Many fonts are used, in many colors. Some are well defined, while others are missing parts of their segments. Some printers use a dot matrix: the dots marked by these impact printers are spaced closely in a particular shape to form the intended character, but they are only loosely connected, or not connected at all. Sometimes a portion of the text is printed over other parts, making it more difficult for the robot to read correctly. The background is not always white: it may include a pattern, image or color. The surfaces on which text is printed are not always flat: they might be curved, as in the case of bottles (medicine, beverage, etc.). These challenges to OCR for robots need to be taken into account when devising the optimal solution.
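Morphological closing (dilation followed by erosion) is one classic way to join the loose dots of a dot-matrix character into a solid stroke. The minimal sketch below works on a small binary grid with a 3x3 neighbourhood; the function names and the toy image are assumptions made for illustration.

```python
def dilate(img):
    """Set a pixel if any pixel in its 3x3 neighbourhood is set."""
    h, w = len(img), len(img[0])
    return [[int(any(img[j][i]
                     for j in range(max(0, y - 1), min(h, y + 2))
                     for i in range(max(0, x - 1), min(w, x + 2))))
             for x in range(w)] for y in range(h)]


def erode(img):
    """Keep a pixel only if every in-bounds pixel in its 3x3 neighbourhood is set."""
    h, w = len(img), len(img[0])
    return [[int(all(img[j][i]
                     for j in range(max(0, y - 1), min(h, y + 2))
                     for i in range(max(0, x - 1), min(w, x + 2))))
             for x in range(w)] for y in range(h)]


def closing(img):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(img))


# Toy image: a horizontal stroke printed as four separate dots (1 = ink).
dots = [[0] * 11 for _ in range(5)]
for col in (2, 4, 6, 8):
    dots[2][col] = 1

closed = closing(dots)
print(dots[2])    # [0, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0]
print(closed[2])  # [0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0]
```

The gaps between the dots are filled while the stroke keeps its original end points and thickness. A larger structuring element would bridge wider gaps, at the risk of merging neighbouring characters.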

RSIP Vision’s approach to OCR for robots

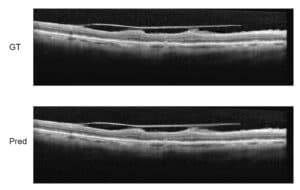

RSIP Vision decided to take a fresh, original approach to these challenges. Rather than developing a complex OCR engine to handle each specific case, RSIP Vision developed a powerful pre-processing technique that enhances image quality and resolves deformations upfront, allowing off-the-shelf OCR packages to be used for text recognition.

To achieve this image pre-processing for text enhancement, RSIP Vision uses both classic and modern machine vision techniques. Classic methods include various adaptive thresholding techniques, morphological operations, and heuristic operations for filtering noise and selecting text parts. Other cases require more sophisticated techniques: in such cases RSIP Vision trains deep learning models for text detection and classification, to accomplish the OCR for robots task and OCR projects in general.
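To make the idea of adaptive thresholding concrete, here is a minimal local-mean sketch: a pixel counts as text when it is noticeably darker than the average of its own neighbourhood. This is a generic textbook variant, not RSIP Vision's actual pipeline, and the `radius` and `offset` parameters and the toy image are assumptions.

```python
def adaptive_threshold(gray, radius=1, offset=10):
    """Mark a pixel as text (1) when it is darker than its local mean minus `offset`."""
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Neighbourhood window, clipped at the image borders.
            window = [gray[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            if gray[y][x] < sum(window) / len(window) - offset:
                out[y][x] = 1
    return out


# Toy image: background brightens from left to right, with two dark text
# pixels in the middle row (values 60 and 120).
gray = [
    [100, 120, 140, 160, 180, 200],
    [100,  60, 140, 160, 120, 200],
    [100, 120, 140, 160, 180, 200],
]

mask = adaptive_threshold(gray)
for row in mask:
    print(row)
# [0, 0, 0, 0, 0, 0]
# [0, 1, 0, 0, 1, 0]
# [0, 0, 0, 0, 0, 0]
```

No single global threshold would work on this image, because the text pixel on the bright side (120) is lighter than the background on the dark side (100). Comparing each pixel with its own neighbourhood sidesteps that problem.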