Tissue sparing is a common practice in surgery. The approach aims to remove as little of the surrounding tissue as possible during a procedure. Studies have shown that tissue-sparing procedures have fewer complications and shorter recovery times.

In urology, tissue sparing is relevant to many surgical procedures, such as prostatectomy and cystectomy. Often a tumor needs to be removed, and it is preferable to preserve as much tissue as possible without leaving cancer cells behind. In prostatectomy, sparing tissue can avoid damage to the neurovascular bundle (NVB), reducing the complication rate. Female cystectomy, which is usually accompanied by removal of surrounding organs, can also benefit from tissue sparing.

Tissue sparing requires three techniques: (i) minimal incisions for surgical access, (ii) extreme precision in tumor removal, and (iii) steady handling of surgical tools. Below we discuss how artificial intelligence (AI) and computer vision can improve these techniques and assist in tissue sparing.

(i) Minimizing the entry port in a laparoscopic or open procedure poses several challenges: both the field of view and the tool’s operating range are limited. With a limited field of view, it is difficult to navigate the surgical scene and recognize anatomical landmarks. Deep learning networks can be trained to detect specific landmarks and highlight them on the screen, assisting recognition during the procedure. Additionally, using the pre-op CT or MRI scan, a detailed procedure plan can be devised by segmenting and modeling the relevant anatomical structures, and the ideal incision position can be determined. This plan can be registered with intra-op imaging to verify the incision location. Such modules are developed using a combination of classic computer vision algorithms and deep learning.

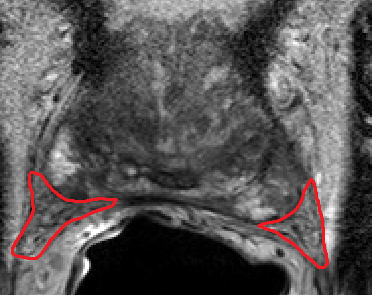

(ii) Removing a tumor, whether radical or partial, requires precise dissection of the tissue. The tumor needs to be removed fully, with minimal damage to surrounding healthy tissue, vasculature, and nerves. Such precision can be enhanced by fusing the pre-op CT or MRI images, together with the tumor segmentation, with the intra-op video or ultrasound (US) images. The surgeon can then view the surgical plan in real time and operate accordingly. The pre-op segmentation can be obtained using neural networks trained to segment tumors. The registration can be achieved using deep learning techniques and classic computer vision, and further improved using existing or wearable landmarks designated for image registration.
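To make the landmark-based part of registration concrete, here is a minimal sketch of a least-squares rigid alignment between paired landmarks. It is an illustration only, under simplifying assumptions: the landmarks are 2D, already matched one-to-one, and related by a pure rotation and translation. Clinical registration is typically 3D and often deformable. The function names and coordinates are hypothetical.

```python
import math

def estimate_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src landmarks onto dst."""
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two paired landmarks")
    # Centroids of both landmark sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    # Accumulate dot and cross products of the centered pairs;
    # the optimal angle is atan2(sum of crosses, sum of dots).
    dot = 0.0
    cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay = ax - sx, ay - sy
        bx, by = bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that carries the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, (tx, ty)

def apply_rigid_2d(theta, t, point):
    """Rotate a 2D point by theta, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x - s * y + t[0], s * x + c * y + t[1])

# Hypothetical pre-op landmark positions (arbitrary units).
pre_op = [(0.0, 0.0), (10.0, 0.0), (0.0, 5.0)]
# Simulated intra-op positions: same landmarks rotated 30 degrees and shifted.
true_theta = math.radians(30)
intra_op = [apply_rigid_2d(true_theta, (5.0, -2.0), p) for p in pre_op]

theta, t = estimate_rigid_2d(pre_op, intra_op)
# Map a hypothetical pre-op tumor center into the intra-op frame.
tumor_center_intra_op = apply_rigid_2d(theta, t, (4.0, 2.0))
print(math.degrees(theta), t, tumor_center_intra_op)
```

Once the transform is estimated, any point of the pre-op segmentation can be mapped into the intra-op frame the same way, which is the basis for overlaying the plan on the live image.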

(iii) The solutions described above assist in visualizing the tumor throughout the procedure. However, accuracy still depends on the surgeon’s tool-handling capabilities. To reduce this dependence, robots can be introduced. Robot-assisted surgeries (RAS) are becoming increasingly common. As expected, the robot’s “hand” is more stable than the human hand and can perform these surgeries with increased accuracy and precision. To make use of this accuracy, key points within the anatomy can be selected, and the tools’ position relative to them can be calculated. This information provides real-time notifications of proximity to the tissue and warns against undesired resections. The tool’s position can be obtained with tool tracking algorithms, a well-established method that segments the tool in the field of view and uses prior knowledge of camera and tool characteristics to position the tool accurately in space. The robot can use this information to maneuver the tool accurately while avoiding unnecessary incisions. Tracking can also be done with electromagnetic (EM) sensing: a designated EM sensor is attached to the tool, and an external EM field is used to record the tool’s position continuously. Further developments in this field may also register the robot’s coordinate system with the patient’s, providing more accurate positioning relative to the anatomy. The surgeon can then approach the procedure with high accuracy and stability, ensuring minimal surrounding damage and sparing healthy tissue.
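The proximity check described here can be sketched in a few lines. The example below assumes the tracked tool-tip position is reported in the robot's coordinate frame, and that a rigid robot-to-patient transform is already known from a prior registration step. The key-point names, coordinates, and the 5 mm warning threshold are hypothetical values chosen for illustration, not clinical recommendations.

```python
import math

def to_patient_frame(transform, point):
    """Apply a 4x4 homogeneous rigid transform (nested lists) to a 3D point."""
    x, y, z = point
    return tuple(
        transform[r][0] * x + transform[r][1] * y + transform[r][2] * z + transform[r][3]
        for r in range(3)
    )

def proximity_alerts(tool_tip, key_points, warn_mm):
    """Return (name, distance) for every key point closer than warn_mm, nearest first."""
    alerts = []
    for name, position in key_points.items():
        distance = math.dist(tool_tip, position)
        if distance < warn_mm:
            alerts.append((name, distance))
    return sorted(alerts, key=lambda alert: alert[1])

# Assumed robot-to-patient transform: identity rotation, 10 mm shift along x.
robot_to_patient = [
    [1.0, 0.0, 0.0, 10.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Hypothetical anatomical key points in the patient frame (mm).
key_points = {
    "nvb_left": (12.0, 3.0, 7.0),
    "vessel": (30.0, 3.0, 4.0),
}

# Tool tip as reported by tracking, in the robot frame (mm).
tip_patient = to_patient_frame(robot_to_patient, (2.0, 3.0, 4.0))
alerts = proximity_alerts(tip_patient, key_points, warn_mm=5.0)
for name, distance in alerts:
    print(f"warning: tool tip is {distance:.1f} mm from {name}")
```

In a real system this check would run on every tracking update, and the threshold would likely differ per structure.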

Combining these methodologies can aid in tissue sparing during urologic procedures. A smaller port in an ideal position can be achieved without compromising the anatomical understanding of the surgical scene, the surgical target can be viewed accurately in real time, and the introduction of RAS can reduce human error, ultimately leading to less damage to healthy tissue. These solutions are challenging to implement, and advanced knowledge in AI and computer vision is essential for developing them. RSIP Vision has vast experience in developing computer vision solutions and the staff needed for this mission. Contact us for a speedy development process and faster time-to-market.

Urology
