Classification problems in image and signal analysis require algorithms that take into account the complex information embedded in the data. Images may contain many thousands of pixel values in several color channels; the correlations and relationships among these values characterize the class and make it possible to draw a criterion that separates it from other classes. Integrating all of this information within a reasonable running time is generally infeasible for classification problems. Therefore, image and signal features are extracted as representatives of each object and its class. These features, be they boundary representations such as Fourier descriptors, Harris corners or Gaussian-like peaks, form a lower-dimensional representation of the object that falls within a characteristic region of the feature space: hopefully well separated from objects of other classes, yet similar to objects of the same class.
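
As a minimal sketch of how a boundary can be compressed into a handful of numbers, the Python/NumPy code below computes Fourier descriptors of a closed contour. The function names, the choice of `n_coeffs=8` and the normalization (magnitudes scaled to unit length) are illustrative assumptions rather than a prescribed recipe; the contour is assumed to be an ordered (N, 2) array of boundary points, and `square_contour` is a hypothetical helper that builds a test shape.

```python
import numpy as np


def fourier_descriptors(contour, n_coeffs=8):
    """Compact shape signature of a closed contour given as an (N, 2) array of
    (x, y) boundary points ordered along the curve; requires N > 2 * n_coeffs."""
    # Treat the boundary as a complex signal z = x + iy and take its DFT.
    z = contour[:, 0] + 1j * contour[:, 1]
    c = np.fft.fft(z)
    # Keep the n_coeffs lowest positive and negative frequencies; the DC term
    # c[0] only encodes the contour's position, so dropping it removes translation.
    low = np.concatenate([c[1:n_coeffs + 1], c[-n_coeffs:]])
    # Magnitudes discard the phase, which is what rotation and a shifted
    # starting point change; normalizing to unit length removes scale.
    mags = np.abs(low)
    return mags / np.linalg.norm(mags)


def square_contour(points_per_side=16):
    """Hypothetical test shape: unit square traversed counterclockwise."""
    t = np.linspace(0.0, 1.0, points_per_side, endpoint=False)
    zeros, ones = np.zeros_like(t), np.ones_like(t)
    return np.concatenate([
        np.stack([t, zeros], axis=1),      # bottom edge, left to right
        np.stack([ones, t], axis=1),       # right edge, upwards
        np.stack([1 - t, ones], axis=1),   # top edge, right to left
        np.stack([zeros, 1 - t], axis=1),  # left edge, downwards
    ])
```

With these choices, a square that has been rotated, scaled, translated and re-sampled from a different starting point yields the same 16-number signature as the original, whereas a circle yields a clearly different one.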

Image features are, loosely speaking, salient points in the image. Ideally, features should be invariant to image transformations such as rotation, translation and scaling. In the context of classification, the features of a sample object (image) should not change when the image is rotated, when its scale changes (tantamount to a change in resolution or magnification) or when the acquisition angle changes. In practice, however, these invariances hold only within limits. Furthermore, features should be insensitive to lighting conditions and color (unless specifically required).
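
The invariance requirements can be checked numerically. The sketch below assumes a deliberately simple, hypothetical global feature (a histogram of standardized pixel intensities, not a salient-point feature) and illustrates that it is unchanged by a 90° rotation and by a global gain/offset change in illumination, while the raw pixel array is not.

```python
import numpy as np


def intensity_signature(image, bins=16):
    """Hypothetical toy global feature: histogram of standardized intensities."""
    img = np.asarray(image, dtype=float)
    # Standardizing (zero mean, unit variance) cancels global gain/offset
    # changes in illumination, i.e. image -> a * image + b with a > 0.
    z = (img - img.mean()) / (img.std() + 1e-12)
    # A histogram ignores where each pixel is, so rotating the whole image
    # (which only rearranges pixels) leaves it unchanged.
    hist, _ = np.histogram(z, bins=bins, range=(-4.0, 4.0))
    return hist / hist.sum()
```

Real salient-point features need more machinery to approach such invariances (SIFT, for example, assigns each keypoint a dominant orientation), and even then they hold only within limits.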

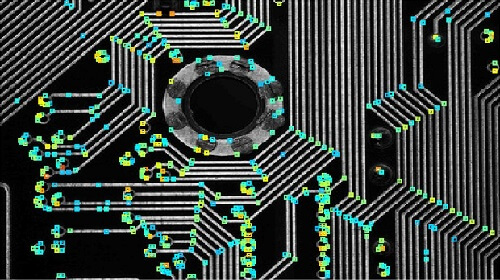

Well-known examples of image features include corners, edges, blobs, SIFT and SURF. Not all of them fulfill the invariance and insensitivity requirements of ideal features. However, depending on the classification task and the expected geometry of the objects, features can be selected judiciously. For example, on a printed circuit board (PCB), which is made up of well-defined geometric shapes, corner features might be a good starting point. The Harris corner detector is based on the two eigenvalues of the 2x2 structure tensor of the image, i.e. the Gaussian-weighted matrix of products of the image's partial derivatives (themselves calculated by convolving the image with derivatives of a Gaussian kernel). From these eigenvalues it determines a “cornerness” measure: two large eigenvalues indicate a corner, one large and one small an edge, and two small ones a flat region (see figure).
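
A minimal NumPy sketch of the Harris response follows. It assumes a grayscale image given as a 2-D array and uses hypothetical parameter names: `sigma_d` (pre-smoothing before differentiation, which together with central differences approximates derivative-of-Gaussian filtering), `sigma_i` (the Gaussian window over which gradient products are averaged) and the empirical constant `k`. Instead of an explicit eigen-decomposition it uses the standard identity R = det(M) - k * trace(M)^2, where det(M) is the product and trace(M) the sum of the two eigenvalues.

```python
import numpy as np


def gaussian_kernel1d(sigma):
    """Normalized 1-D Gaussian, truncated at about 3 sigma."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def smooth(img, sigma):
    """Separable Gaussian blur; edge padding keeps the output shape."""
    k = gaussian_kernel1d(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    # Blur along rows, then along columns; 'valid' trims the padding off again.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def harris_response(img, sigma_d=1.0, sigma_i=2.0, k=0.05):
    """Harris cornerness map R = det(M) - k * trace(M)**2 for a 2-D image."""
    # Gaussian pre-smoothing followed by central differences approximates
    # convolution with the derivatives of a Gaussian kernel.
    smoothed = smooth(np.asarray(img, dtype=float), sigma_d)
    Iy, Ix = np.gradient(smoothed)  # derivatives along rows (y) and columns (x)
    # Entries of the 2x2 structure tensor M, averaged over a Gaussian window.
    Sxx = smooth(Ix * Ix, sigma_i)
    Syy = smooth(Iy * Iy, sigma_i)
    Sxy = smooth(Ix * Iy, sigma_i)
    det = Sxx * Syy - Sxy ** 2  # product of the two eigenvalues
    trace = Sxx + Syy           # sum of the two eigenvalues
    return det - k * trace ** 2
```

Large positive values of `R` mark corners, negative values mark edges and values near zero mark flat regions. Libraries such as OpenCV (`cv2.cornerHarris`) and scikit-image (`skimage.feature.corner_harris`) provide tested implementations of the same response.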

Feature extraction is accompanied by descriptors of the feature points, which are used to compare features extracted from different images. In addition, descriptors such as those of SURF features allow us to link an object to a specific class, based on a similarity measure. Studying the distribution of feature values within a specific class (a type of PCB, or objects such as dogs, cats, faces, etc.) leads naturally to classification with machine learning methods. Classifying a new sample with such algorithms requires a prior training stage, during which the margins between classes are learned from features extracted from labeled examples; afterwards, features are extracted from each new image and passed to the trained classifier.
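
To make the split between training and prediction concrete, here is a sketch of one of the simplest possible classifiers, a nearest-centroid rule, operating on feature vectors that are assumed to have been extracted already. The class name and the PCB labels used in the usage note are hypothetical, and nearest-centroid is chosen only for brevity; it is not a classifier the text prescribes.

```python
import numpy as np


class NearestCentroid:
    """Minimal classifier: one mean feature vector ("centroid") per class."""

    def fit(self, features, labels):
        features = np.asarray(features, dtype=float)
        labels = np.asarray(labels)
        self.classes_ = np.unique(labels)
        # Training stage: summarize each class by the mean of its feature vectors.
        self.centroids_ = np.stack(
            [features[labels == c].mean(axis=0) for c in self.classes_]
        )
        return self

    def predict(self, features):
        features = np.atleast_2d(np.asarray(features, dtype=float))
        # Euclidean distance from every sample to every class centroid.
        d = np.linalg.norm(features[:, None, :] - self.centroids_[None, :, :], axis=2)
        # Each sample gets the label of its closest centroid.
        return self.classes_[np.argmin(d, axis=1)]
```

Usage: `NearestCentroid().fit(X_train, y_train).predict(X_new)`, e.g. with labels such as `"pcb_a"` and `"pcb_b"`. scikit-learn ships a comparable `sklearn.neighbors.NearestCentroid`; richer models such as support vector machines learn explicit margins between classes.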

Characterizing an object by a combination of feature points and their associated descriptors is also common practice. Because features such as peaks and edges capture one aspect of the object, whereas SURF and binary features shed light on other aspects, a newly examined image, in which the object may appear in an arbitrary pose, should naturally be characterized by every available means. It is then the responsibility of the algorithm developers to make sense of the values and types of the extracted features and their relationships, in order to tailor a classification process.
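
One common way to combine heterogeneous feature types is to standardize each block separately and then concatenate the blocks. The sketch below assumes each object has already been summarized as one fixed-length vector per feature type (how many keypoint descriptors are pooled into one vector is left open); the function names are hypothetical, and the 1/sqrt(dimension) weighting is one illustrative choice that keeps a 64-dimensional descriptor block from outweighing a 2-dimensional one.

```python
import numpy as np


def fit_block_scaler(blocks):
    """Learn per-column mean and std for each (n_samples, d_i) feature block."""
    # The small constant guards against division by zero for constant columns.
    return [(b.mean(axis=0), b.std(axis=0) + 1e-12) for b in blocks]


def combine_blocks(blocks, stats):
    """Standardize each block, weight it by 1/sqrt(d_i) and concatenate."""
    parts = []
    for b, (mu, sd) in zip(blocks, stats):
        z = (b - mu) / sd
        # After this scaling each block contributes, on average over the
        # training data, a squared norm of 1 regardless of its dimension.
        parts.append(z / np.sqrt(b.shape[1]))
    return np.hstack(parts)
```

The scaler statistics are learned on training data and reused unchanged for new images, so that training and test vectors live in the same space.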

Beyond classification, image features are used for object matching. Reducing an image or signal to a few salient points that characterize it leads to fast object comparison algorithms. Feature-based comparison finds applications, e.g., in searching the World Wide Web for image copyright violations.
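
Feature-based comparison typically matches descriptors between two images. The sketch below uses brute-force nearest-neighbour search with Lowe's ratio test, which keeps a match only if the best candidate is clearly closer than the second best. It assumes real-valued descriptors stored as rows of NumPy arrays, a hypothetical `ratio=0.8` threshold, and at least two descriptors in `desc_b`; binary descriptors would normally be compared with the Hamming distance instead.

```python
import numpy as np


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match rows of desc_a to rows of desc_b using the ratio test.

    Returns a list of (index_in_a, index_in_b) pairs.
    """
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    d = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    order = np.argsort(d, axis=1)
    rows = np.arange(len(desc_a))
    best, second = order[:, 0], order[:, 1]
    # Keep a match only if it is clearly better than the runner-up,
    # which discards ambiguous descriptors (e.g. repetitive texture).
    keep = d[rows, best] < ratio * d[rows, second]
    return [(int(i), int(best[i])) for i in rows[keep]]
```

The number of surviving matches can serve as a simple similarity score between two images; for large image collections, approximate nearest-neighbour indexes usually replace the brute-force distance matrix.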

In the past few decades, features as object characterizations have found a permanent place in the computer vision toolbox. However, off-the-shelf methods rarely perform well without pre- and post-processing stages. For many feature types, if not all of them, parameters need to be tuned to allow intelligent feature selection based on the induced metrics and descriptors. Whether the purpose is classification, image retrieval, characterization or comparison, feature extraction is just one part of the pipeline: an efficient holistic solution requires the work of a computer vision expert. Please refer to our computer vision outsourcing works to learn how RSIP Vision’s expertise is just the right fit for your project.
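
As an example of post-processing with tunable parameters, the sketch below turns a response map (e.g. a Harris response) into a discrete set of feature points by non-maximum suppression and thresholding. The function name and both knobs, `radius` and `rel_threshold`, are hypothetical; it assumes a float response map with a positive maximum, and plateaus of equal values are all kept.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def select_peaks(response, radius=2, rel_threshold=0.1):
    """Return (row, col) coordinates of thresholded local maxima."""
    size = 2 * radius + 1
    # Pad with -inf so border pixels are compared only with real neighbours.
    padded = np.pad(response, radius, mode="constant", constant_values=-np.inf)
    # Maximum over each (size x size) neighbourhood, same shape as response.
    local_max = sliding_window_view(padded, (size, size)).max(axis=(2, 3))
    # Non-maximum suppression plus a threshold relative to the strongest response.
    mask = (response == local_max) & (response > rel_threshold * response.max())
    return np.argwhere(mask)
```

Raising `rel_threshold` or `radius` yields fewer, stronger points; suitable values depend on the image content and have to be tuned per application.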