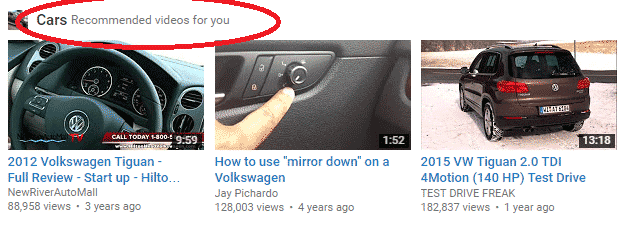

YouTube hosts more than 150 million videos, with an estimated 500 hours of videos uploaded to it every minute. Most of the videos have a duration below 10 minutes in length and are captured by amateurs under poor imaging and lightning condition, with little or no metadata. This huge quantity of videos needs to be processed, classified, tagged and ranked by users or by an automatic algorithm, before it can be used by a recommender system. The recommender system helps users having a set of preferences reach faster their preferred content and to navigate through the vast amount of visual content as smoothly as possible. By filling the landing page only with content deemed relevant to a specific user, recommender systems are used to optimize the valuable space dedicated by websites to recommended content for their visitors.

Recommendation systems are primarily built based on video textual information and video content similarity and then matched to user profile and history. The video features are used in the estimation of the “distance” of a user profile from them to come up with a set of best matches, under the form of other video content the user is most likely interested in.

In general, video recommendation usually works by mining users’ profiles and history and after that by ranking videos according to their preferences and viewing history. With each video ranked according to the search terms and user’s search queries, video recommendation can be regarded as a ranking task.

Collected user information (profile) includes education, background, occupation, philosophy, location, interests and more. Each item needs to be clustered and attributed to a predefined class, according to criteria of similarity with others. The video information includes its title, description, tags and category.

Several methods exist for recommendation:

1) Collaborative filtering is the most common approach in which users are first ranked for similarity; next, videos are suggested to them based on common interest among similar users. In collaborative filtering we encounter the new user problem (or the new user cold start problem), which consists in the difficulty of recommending a video to a new user with no previous activity. A second problem encountered in collaborative filtering is the new item problem, the difficulty of which stems from presenting any recommendation to new video entries than haven’t been rated yet.

One way to incorporate information about user and item is the use of social media. Social media collaborative filtering approaches usually inspect the user’s entourage, which then hints on user preferences. Close friends are natural choice in predicting user video preferences because most frequently they share common interests, less likely to be shared by random strangers.

2) One other recommendation strategy is the content-based filtering. In this approach, recommendation is made on the basis of user history: this method does not rank for similarity, which means that similar user data is not exploited for improving video recommendation.

3) A combination of collaborative filtering and content-based filtering is called hybrid filtering.

Incorporating social media information, strategies like “follow the leader” were previously devised.

Textural similarity between video and user information is formed by various distance measures, most commonly based on Gaussian distributions with the (normalized) Google distance between the set of keywords of user profile and video tags. Textural Google distance is defined as semantic measure derived from the number of hits returned by Google search for each given set of keywords.

The user’s viewing history can be of three types: recent history (last one viewed), short-term history (e.g. same day), and long-term history. Each video in the history list can be ranked according to its textural similarity (text-based video similarity), meaning a short distance between keywords or video tags and the user’s keywords or phrase. The distance measure can be once again defined by the Gaussian function with (normalized) Google distance between the search terms.

Another way to rank videos in the history lists is by content-based video similarity. The visual similarity between videos is estimated by extracting representative key frames from each video. Global visual features (like color moments, wavelet texture, and edge direction histograms) are extracted from each representative frame. The visual similarity between videos is estimated by the distance measure of their features.

RSIP Vision has worked on many projects in the field of visual search and video recommendation. You can read about some of them in the section dedicated to our projects.

In general, video recommendation usually works by mining users’ profiles and history and after that by ranking videos according to their preferences and viewing history. With each video ranked according to the search terms and user’s search queries, video recommendation can be regarded as a ranking task.

Collected user information (profile) includes education, background, occupation, philosophy, location, interests and more. Each item needs to be clustered and attributed to a predefined class, according to criteria of similarity with others. The video information includes its title, description, tags and category.

Several methods exist for recommendation:

1) Collaborative filtering is the most common approach in which users are first ranked for similarity; next, videos are suggested to them based on common interest among similar users. In collaborative filtering we encounter the new user problem (or the new user cold start problem), which consists in the difficulty of recommending a video to a new user with no previous activity. A second problem encountered in collaborative filtering is the new item problem, the difficulty of which stems from presenting any recommendation to new video entries than haven’t been rated yet.

One way to incorporate information about user and item is the use of social media. Social media collaborative filtering approaches usually inspect the user’s entourage, which then hints on user preferences. Close friends are natural choice in predicting user video preferences because most frequently they share common interests, less likely to be shared by random strangers.

2) One other recommendation strategy is the content-based filtering. In this approach, recommendation is made on the basis of user history: this method does not rank for similarity, which means that similar user data is not exploited for improving video recommendation.

3) A combination of collaborative filtering and content-based filtering is called hybrid filtering.

Incorporating social media information, strategies like “follow the leader” were previously devised.

Textural similarity between video and user information is formed by various distance measures, most commonly based on Gaussian distributions with the (normalized) Google distance between the set of keywords of user profile and video tags. Textural Google distance is defined as semantic measure derived from the number of hits returned by Google search for each given set of keywords.

The user’s viewing history can be of three types: recent history (last one viewed), short-term history (e.g. same day), and long-term history. Each video in the history list can be ranked according to its textural similarity (text-based video similarity), meaning a short distance between keywords or video tags and the user’s keywords or phrase. The distance measure can be once again defined by the Gaussian function with (normalized) Google distance between the search terms.

Another way to rank videos in the history lists is by content-based video similarity. The visual similarity between videos is estimated by extracting representative key frames from each video. Global visual features (like color moments, wavelet texture, and edge direction histograms) are extracted from each representative frame. The visual similarity between videos is estimated by the distance measure of their features.

RSIP Vision has worked on many projects in the field of visual search and video recommendation. You can read about some of them in the section dedicated to our projects.