Deep Neural Networks Used for Optical Character Recognition

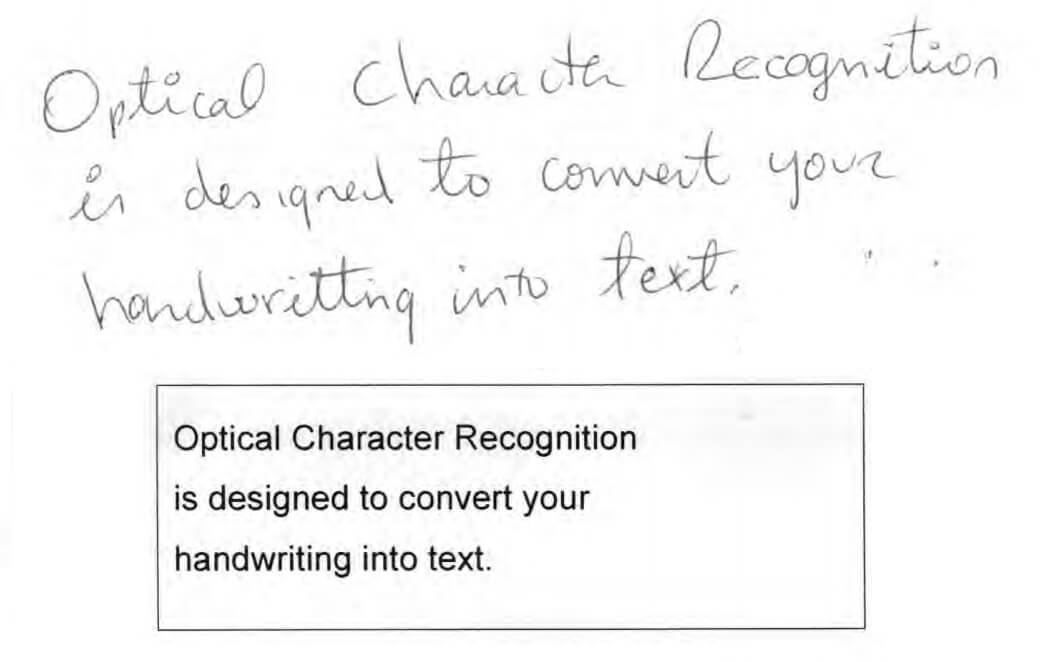

Optical Character Recognition (OCR), used for the visual inspection of documents, has found wide application in both industry and research. The automatic recognition and analysis of printed characters by vision-based algorithms is still much more advanced and widely used than that of handwritten characters, mainly because of the variability in the shapes and styles of handwriting (see figure). It is therefore difficult to treat handwritten OCR as a single, uniform problem. Tailor-made algorithms and adjustments are often needed to recognize text in different languages; to account for people’s different handwriting styles and text slant; and to deal with technical imaging issues such as camera position, camera angle and uneven illumination.

Deep learning for OCR

Deep Neural Networks (DNNs) have developed rapidly as a learning mechanism for recognition and have gained popularity over the past decade. This popularity is owed primarily to the high accuracy DNNs have achieved in spotting text regions and deciphering the characters simultaneously. DNNs, of which Convolutional Neural Networks (CNNs) are a type widely used for images, are essentially multi-layered neural networks that learn and process features. Each neuron (node) in each layer is fed with information passed from the nodes connected to it. A processing mechanism (transfer function) then determines how much of the processed information is passed on to the nodes that the present one feeds. The architecture of the network, that is, the way neurons and layers are connected, plays a primary role in determining the network’s ability to produce meaningful results.
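The flow of information described above can be sketched in a few lines of Python. This is only a minimal illustration, not the architecture of any of the systems cited here: the sigmoid transfer function, the fully connected layout, the function names and all weight values are assumptions chosen for the example.

```python
import math


def sigmoid(x):
    """Transfer function (an assumed choice): maps a weighted sum into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Compute the outputs of one fully connected layer.

    weights[j][i] is the strength of the connection from input node i
    to node j of this layer (illustrative values, not learned ones).
    """
    outputs = []
    for node_weights, bias in zip(weights, biases):
        # Each node sums the information arriving from the nodes feeding it...
        weighted_sum = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        # ...and the transfer function decides how much of it is passed on.
        outputs.append(sigmoid(weighted_sum))
    return outputs


def network_forward(inputs, layers):
    """Pass the inputs through each layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 3 inputs -> 2 hidden nodes -> 1 output node.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
pixels = [0.0, 1.0, 0.5]  # stand-in for three pixel intensities
output = network_forward(pixels, layers)
print(output)
```

Changing the shape of `layers` (how many layers there are and how many nodes each contains) changes the architecture. In a real OCR network the weights would be learned from labelled character images rather than written by hand.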

The potential of DNNs in OCR has been demonstrated by many teams of researchers. Among others, Jaderberg, Vedaldi and Zisserman presented an architecture for both text detection and character recognition. A GPU implementation of DNNs in an architecture devised by Cireşan et al., who trained and tested it on a dataset of deformed characters, has been shown to speed up processing and improve accuracy. Jaderberg et al. performed detection and OCR in natural scene images, an approach that may also be applied to images and real-time video obtained by cellphones. For Latin and Chinese OCR see Cireşan, Meier and Schmidhuber, and for Telugu scripts see Achanta and Hastie. These examples are by no means exhaustive; interested readers are encouraged to consult the references within the articles mentioned.

References:

Jaderberg, Max, Andrea Vedaldi, and Andrew Zisserman. “Deep features for text spotting.” Computer Vision–ECCV 2014. Springer International Publishing, 2014. 512-528.

Cireşan, Dan C., et al. “Handwritten digit recognition with a committee of deep neural nets on gpus.” arXiv preprint arXiv:1103.4487 (2011).

Jaderberg, Max, et al. “Synthetic data and artificial neural networks for natural scene text recognition.” arXiv preprint arXiv:1406.2227 (2014).

Cireşan, Dan C., Ueli Meier, and Jürgen Schmidhuber. “Transfer learning for Latin and Chinese characters with deep neural networks.” Neural Networks (IJCNN), The 2012 International Joint Conference on. IEEE, 2012.

Achanta, Rakesh, and Trevor Hastie. “Telugu OCR Framework using Deep Learning.” arXiv preprint arXiv:1509.05962 (2015).