Using computer vision to identify the tools employed at different stages of a procedure is not only another step toward robotic surgery; it is also a simple yet very useful way to streamline and safeguard the surgical process.

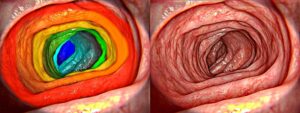

Surgical instrument (tool) segmentation and classification is a computer vision task that complements workflow analysis. It automatically detects and identifies the tools used during a procedure, and assesses whether the surgeon is using them correctly.

For example, it can detect the position of a tool relative to a target tissue, lesion or growth and, using closed-loop instrument control, direct the movement of the instrument. When combined with Surgical Workflow Analysis solutions, it can also raise an alert if the wrong instrument is being used.
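The wrong-instrument alert can be sketched as a lookup of the tools expected in the current workflow phase. This is a minimal illustration only: the phase names, tool names and the `EXPECTED_TOOLS` table are hypothetical, and a real system would take both the phase and the detected tools from trained models.

```python
# Hypothetical mapping from workflow phase to the instruments expected in it.
EXPECTED_TOOLS = {
    "dissection": {"grasper", "hook"},
    "clipping": {"grasper", "clipper"},
    "cutting": {"grasper", "scissors"},
}


def wrong_instruments(phase, detected_tools):
    """Return the detected tools that are not expected in the given phase.

    Raises KeyError if the phase is not in the (hypothetical) table.
    """
    expected = EXPECTED_TOOLS[phase]
    return sorted(set(detected_tools) - expected)


# Tools reported by the segmentation model for the current frame (made-up values).
unexpected = wrong_instruments("clipping", ["grasper", "scissors"])
if unexpected:
    print("Alert: unexpected instrument(s) during clipping:", ", ".join(unexpected))
```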

Tool segmentation with Deep Learning

As in any image segmentation problem in deep learning, the goal of instrument segmentation is to label every image pixel that belongs to a surgical instrument. One of the most popular convolutional neural network architectures for medical image segmentation is U-Net, which is also used extensively for instrument segmentation.
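To make the pixel-labeling goal concrete, here is a small sketch of the step that follows the network: turning a per-pixel probability map into a binary instrument mask and comparing it with a ground-truth mask. The probability values are invented stand-ins for the output of a segmentation network such as U-Net, and the 0.5 threshold and the intersection-over-union score are common choices assumed here for illustration.

```python
def to_mask(prob_map, threshold=0.5):
    """Label a pixel 1 (instrument) when its probability reaches the threshold."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_map]


def iou(pred, truth):
    """Intersection over union between two binary masks of equal size."""
    pairs = [(p, t) for pr, tr in zip(pred, truth) for p, t in zip(pr, tr)]
    inter = sum(p & t for p, t in pairs)
    union = sum(p | t for p, t in pairs)
    return inter / union if union else 1.0


# Invented 3x3 probability map standing in for a network output.
probs = [
    [0.1, 0.2, 0.9],
    [0.0, 0.8, 0.7],
    [0.1, 0.6, 0.3],
]
# Hand-made ground-truth mask for the same 3x3 image.
truth = [
    [0, 0, 1],
    [0, 1, 1],
    [0, 0, 0],
]

mask = to_mask(probs)
print(mask)
print("IoU:", iou(mask, truth))
```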

Ideally, surgical instrument segmentation runs in real time, identifying tools as the surgery is being performed. To achieve this, the neural networks need to be supplemented with additional routines optimized for speed.

Another challenge is differentiating one tool from another by classifying and identifying each one. This is usually achieved by adding classes to a segmentation neural network, for example U-Net, and training the network accordingly.
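With extra classes, each pixel receives one score per class and is assigned to the highest-scoring one. The sketch below assumes a hypothetical three-class label set and invented scores; it only shows how a multi-class output is read, not how the network is trained.

```python
from collections import Counter

# Hypothetical label set; index 0 is reserved for background.
CLASS_NAMES = ["background", "grasper", "scissors"]


def classify_pixels(scores):
    """scores[y][x] holds one score per class; return the argmax class per pixel."""
    return [
        [max(range(len(px)), key=px.__getitem__) for px in row]
        for row in scores
    ]


def tools_present(label_map, min_pixels=1):
    """Count non-background pixels per class and name the tools that were found."""
    counts = Counter(c for row in label_map for c in row if c != 0)
    return {CLASS_NAMES[c]: n for c, n in counts.items() if n >= min_pixels}


# Invented per-class scores for a 2x2 image.
scores = [
    [[0.90, 0.05, 0.05], [0.2, 0.7, 0.1]],
    [[0.10, 0.80, 0.10], [0.1, 0.2, 0.7]],
]

labels = classify_pixels(scores)
print(labels)
print(tools_present(labels))
```

The `min_pixels` parameter is an assumed convenience for ignoring tiny spurious regions.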

While instrument segmentation based on a single frame achieves good results, a more robust solution is to combine instrument segmentation with workflow analysis. Identifying the tools from a single frame may be inaccurate, but analyzing several consecutive frames improves accuracy, because the result in one frame should correlate with what we see in the next. Recurrent neural networks, such as Long Short-Term Memory (LSTM) networks, can also be used to supplement convolutional neural networks and achieve better results.
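The idea that consecutive frames should agree can be illustrated without any recurrent network. The sketch below uses a plain sliding-window majority vote, a much simpler stand-in for an RNN or LSTM, to suppress a one-frame misclassification. The window size and the label sequence are assumptions chosen for the example.

```python
from collections import Counter, deque


def smooth_predictions(frame_labels, window=5):
    """Replace each frame's label with the most common label
    among the current frame and the frames just before it."""
    recent = deque(maxlen=window)
    smoothed = []
    for label in frame_labels:
        recent.append(label)
        smoothed.append(Counter(recent).most_common(1)[0][0])
    return smoothed


# Invented per-frame predictions; the single "scissors" is a spurious detection.
raw = ["grasper", "grasper", "scissors", "grasper",
       "grasper", "hook", "hook", "hook"]

print(smooth_predictions(raw, window=3))
```

The vote removes the isolated error at the cost of reacting one frame late to the genuine change of tool, which is the usual trade-off of temporal smoothing.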

Daniel Tomer, Algorithm Team Leader at RSIP Vision, adds: “A major challenge in tool segmentation, and in tool recognition in general, is finding a way to quickly train the model so it is able to recognize new tools. To tackle this challenge, we at RSIP Vision develop for our customers advanced augmentation techniques which are able to generate synthetic data of any given tool and re-train the model using that data. In this way, adding a new ability to the model does not involve tedious and time-consuming data labeling.”
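One generic way to see why synthetic data removes the labeling step is copy-paste augmentation: a tool cutout is composited onto a background image, and the label mask is produced by the same operation. This is only an illustrative sketch of that general idea, not RSIP Vision's technique; the function names, the grayscale list-of-lists image format and the pixel values are all assumptions.

```python
import random


def paste_tool(background, tool, tool_mask, top, left):
    """Composite a tool cutout onto a copy of the background.

    Returns (image, label_mask). The label comes for free from the
    cutout's own mask, so no manual annotation is needed.
    """
    image = [row[:] for row in background]
    label = [[0] * len(row) for row in background]
    for dy, (tool_row, mask_row) in enumerate(zip(tool, tool_mask)):
        for dx, (pixel, is_tool) in enumerate(zip(tool_row, mask_row)):
            if is_tool:
                image[top + dy][left + dx] = pixel
                label[top + dy][left + dx] = 1
    return image, label


def random_sample(background, tool, tool_mask, rng):
    """Paste the tool at a random position that keeps it fully inside the image."""
    top = rng.randint(0, len(background) - len(tool))
    left = rng.randint(0, len(background[0]) - len(tool[0]))
    return paste_tool(background, tool, tool_mask, top, left)


# Invented data: a 4x4 dark background and a 2x2 bright tool cutout.
background = [[0] * 4 for _ in range(4)]
tool = [[9, 9], [9, 0]]
tool_mask = [[1, 1], [1, 0]]

image, label = paste_tool(background, tool, tool_mask, top=1, left=1)
synthetic_image, synthetic_label = random_sample(
    background, tool, tool_mask, random.Random(0)
)
```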

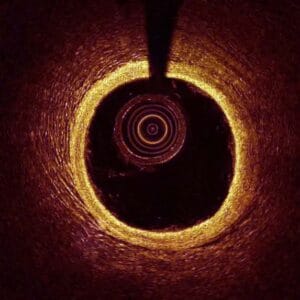

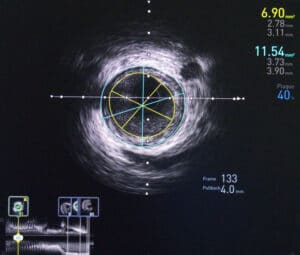

Endoscopy
