This article is the first in a series of three about the sensors the automotive industry uses to give autonomous vehicles perception.

RGB cameras, LiDARs and radars are the three main sensors the automotive industry uses to provide perception for autonomous vehicles at various levels of autonomy. Each has advantages and disadvantages, so the ideal system would combine all three.

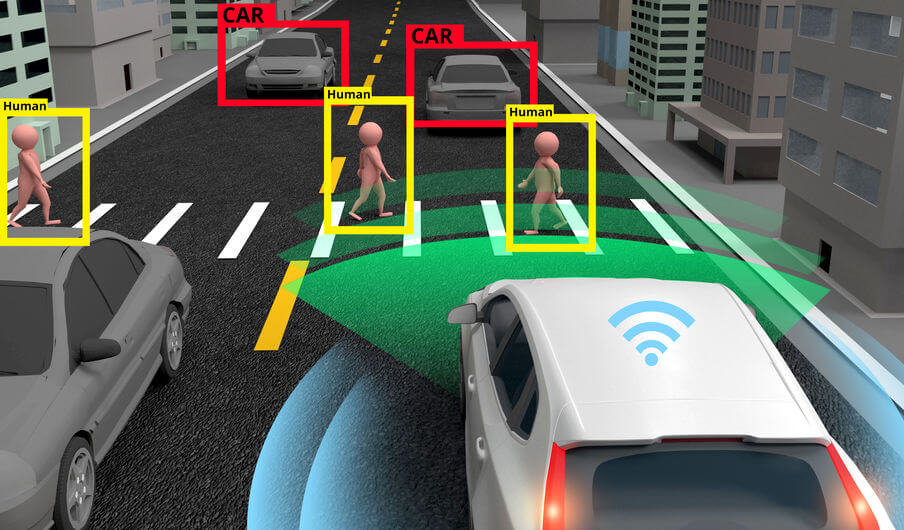

RGB cameras are similar to the regular consumer cameras found in most smartphones. They are cheap, widely available in large quantities, and come in all kinds of sizes and feature sets. They are easy to use and simple to integrate: they can be fitted to any vehicle, and no special expertise is needed to operate them. The fields of computer vision and image processing have relied on them for many years, so a large body of techniques already exists for camera images. An RGB camera can help identify an object, separate it from the background and understand a scene, because it captures rich visual information such as color, texture and contours.

On the other hand, an RGB camera's performance drops dramatically in poor lighting. When facing backlighting, an object appears as a dark silhouette and the camera can no longer make out its contours. In rain, snow or other bad weather, its vision is limited, much like that of the human eye, and like the human eye it needs additional lighting to see in the dark. Measuring distance with an RGB camera is also challenging. The usual approach is to mount two RGB cameras as a stereo pair, but the distance between them (the baseline) is constrained by the width of the car, so depth precision is limited, especially for distant objects. As a result, in good lighting and weather conditions, the camera can help us identify an object and give us a rough idea of its distance.
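To make the baseline limitation concrete, here is a minimal sketch using the standard pinhole model for a rectified stereo pair: depth is Z = f·B/d, where f is the focal length in pixels, B the baseline in meters and d the disparity in pixels. Differentiating gives a first-order depth error of roughly Z²/(f·B)·Δd, which grows with the square of the distance and shrinks as the baseline widens. The focal length, baselines and the 0.25 px disparity error below are hypothetical values chosen for illustration, not figures from any specific vehicle.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (pinhole camera model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float = 0.25) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd.

    The default 0.25 px disparity error is an assumed value for illustration.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    f = 1000.0  # hypothetical focal length, in pixels
    # Hypothetical baselines: a compact module vs. cameras spread across the car
    for baseline in (0.12, 0.30, 1.50):
        for z in (10.0, 50.0, 100.0):
            err = depth_error(f, baseline, z)
            print(f"B={baseline:.2f} m  Z={z:5.1f} m  depth error ~ +/-{err:.2f} m")
```

Because the error scales with Z² and only with 1/B, even spreading the cameras across the full width of the car improves precision linearly, while the error at 100 m remains far larger than at 10 m.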

Read the second article (about LiDARs) and the third article (about radars) in this series about sensors for ADAS.