Deep learning has been successfully applied to a range of problems in pulmonary imaging, including CT registration, airway mapping, real-time catheter navigation, and pulmonary nodule detection. Several of these applications are still under active development; here we review a few of the most recent papers in the field, focusing on new deep learning models for pulmonology.

1.1 Non-rigid registration

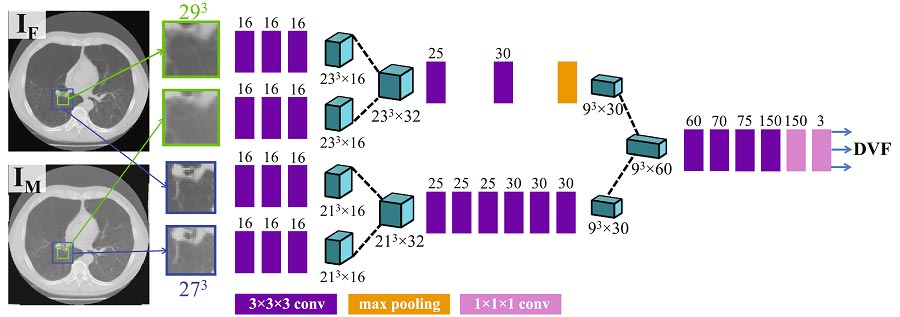

Non-rigid CT registration is usually performed with classic optimization tools such as B-spline registration (for reviews of classic registration methods, see [9, 4]). Deep learning methods have been studied in some registration settings and have the potential to perform this task with higher speed (once trained), robustness, and accuracy. However, deep learning has not yet become the standard framework for this task, which remains an active field of applied research.

One example is [7], who show how to train a 3D CNN, which they call RegNet, to automatically estimate the displacement vector field (DVF) between two CT images: a “fixed” image (I_F) and a “moving” image (I_M), which is a follow-up CT scan of the same patient acquired at a later time. The figure above shows a schematic illustration of the network architecture. Annotated training datasets for this task are typically small (the authors used 19 pairs of fixed and moving images), so the network is trained on many randomized deformations of the input images. They show that RegNet trained on this synthetically generated data is competitive in accuracy with conventional B-spline registration, and suggest that training with artificially generated DVFs is a promising approach for obtaining good results on real clinical data. Another advantage of this end-to-end approach is that no distance metric has to be chosen in advance.
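The idea of training on synthetic deformations can be illustrated with a minimal 2D sketch in plain Python: draw a random smooth DVF, warp a fixed image with it, and keep the known DVF as the regression target for a (fixed, moving) pair. The coarse-lattice-plus-bilinear-upsampling generator, the function names, and the `grid` and `max_disp` parameters are illustrative assumptions, not the deformation model used in [7], which works on 3D CT patches.

```python
import random


def random_smooth_dvf(height, width, grid=4, max_disp=2.0, seed=None):
    """Random smooth 2D DVF: random (dy, dx) vectors on a coarse
    (grid + 1) x (grid + 1) control lattice, bilinearly upsampled."""
    rng = random.Random(seed)
    coarse = [[(rng.uniform(-max_disp, max_disp), rng.uniform(-max_disp, max_disp))
               for _ in range(grid + 1)] for _ in range(grid + 1)]
    dvf = []
    for y in range(height):
        gy = y / (height - 1) * grid  # position on the coarse lattice
        y0 = min(int(gy), grid - 1)
        ty = gy - y0
        row = []
        for x in range(width):
            gx = x / (width - 1) * grid
            x0 = min(int(gx), grid - 1)
            tx = gx - x0
            row.append(tuple(
                (1 - ty) * (1 - tx) * coarse[y0][x0][k]
                + (1 - ty) * tx * coarse[y0][x0 + 1][k]
                + ty * (1 - tx) * coarse[y0 + 1][x0][k]
                + ty * tx * coarse[y0 + 1][x0 + 1][k]
                for k in range(2)))
        dvf.append(row)
    return dvf


def bilinear_sample(img, y, x):
    """Sample img at a real-valued (y, x), clamping to the image border."""
    h, w = len(img), len(img[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = min(int(y), h - 2), min(int(x), w - 2)
    ty, tx = y - y0, x - x0
    return ((1 - ty) * (1 - tx) * img[y0][x0] + (1 - ty) * tx * img[y0][x0 + 1]
            + ty * (1 - tx) * img[y0 + 1][x0] + ty * tx * img[y0 + 1][x0 + 1])


def warp(img, dvf):
    """Backward warping: moving(p) = fixed(p + u(p))."""
    return [[bilinear_sample(img, y + dvf[y][x][0], x + dvf[y][x][1])
             for x in range(len(img[0]))] for y in range(len(img))]


def make_training_pair(fixed, seed=None):
    """Hypothetical helper: returns (fixed, moving, dvf); a network would
    learn to regress dvf from the (fixed, moving) pair."""
    dvf = random_smooth_dvf(len(fixed), len(fixed[0]), seed=seed)
    return fixed, warp(fixed, dvf), dvf


# Usage on a toy 10 x 12 "slice"
fixed = [[float((x * y) % 7) for x in range(12)] for y in range(10)]
f, m, d = make_training_pair(fixed, seed=0)
```

Because the DVF is generated rather than annotated, arbitrarily many training pairs can be drawn from a small set of real images, which is the motivation the paragraph above describes.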

1.2 Airway segmentation

Segmenting and mapping the airways from CT scans is challenging because of the multi-scale nature of the branching airway tree and the limited resolution of CT. For this task, [3] use a hybrid approach that combines region growing with a 3D U-Net. An initial segmentation is obtained with a standard region growing method, and volumes of interest (VOIs) are then defined along this initial result according to the diameter and running direction of each airway.
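The initial region growing step can be sketched as a breadth-first flood fill that starts from a seed voxel (for example, in the trachea) and collects all 6-connected voxels whose intensity falls inside an air-like range. This is a generic textbook version; the HU bounds, the 6-connectivity, and the function name are assumptions, not the specific region growing used in [3].

```python
from collections import deque


def region_grow(volume, seed, low, high):
    """6-connected 3D region growing from `seed` (z, y, x): returns a boolean
    mask of connected voxels whose intensity lies in [low, high]."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    mask = [[[False] * nx for _ in range(ny)] for _ in range(nz)]
    z, y, x = seed
    if not (low <= volume[z][y][x] <= high):
        return mask  # seed itself is outside the intensity range
    mask[z][y][x] = True
    queue = deque([seed])
    neighbours = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in neighbours:
            cz, cy, cx = z + dz, y + dy, x + dx
            if (0 <= cz < nz and 0 <= cy < ny and 0 <= cx < nx
                    and not mask[cz][cy][cx]
                    and low <= volume[cz][cy][cx] <= high):
                mask[cz][cy][cx] = True
                queue.append((cz, cy, cx))
    return mask


# Toy 5x5x5 "CT": soft tissue (40 HU) with a one-voxel-wide air tube along z
vol = [[[40.0] * 5 for _ in range(5)] for _ in range(5)]
for z in range(5):
    vol[z][2][2] = -950.0
vol[0][0][0] = -950.0  # isolated air voxel, not connected to the tube
airway = region_grow(vol, seed=(0, 2, 2), low=-1100.0, high=-900.0)
```

The isolated air voxel is excluded because region growing only follows connectivity from the seed; in real scans this same property causes leakage into the lung parenchyma at low-contrast bronchial walls, one reason a learned refinement stage is useful.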

Once the VOIs are set, a 3D U-Net is trained to perform a finer segmentation within them. Because of memory constraints, the segmentation is run on separate volume patches rather than on the entire volume, and in the final stage all the extracted airway regions are merged into an integrated airway tree. The method was evaluated with 30 CT scans as a training set and 20 scans as a test set, and achieved a Dice similarity coefficient of 86.6%, outperforming the standard U-Net and other benchmarks.
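Patch-wise processing with a final merge, and the Dice score used for evaluation, can be sketched as follows. Here `segment_patch` is a hypothetical stand-in for a trained 3D U-Net, and the regular grid of fixed-size patches with a stride is an assumption for illustration; in [3] the VOIs follow individual airway branches rather than a grid. Merging by logical OR means overlapping patches simply add their detections. The Dice score is 2|A ∩ B| / (|A| + |B|).

```python
def segment_in_patches(volume, patch, stride, segment_patch):
    """Run `segment_patch` on (possibly overlapping) patches and merge the
    boolean outputs by union. Requires stride <= patch on each axis so that
    every voxel is covered."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    out = [[[False] * nx for _ in range(ny)] for _ in range(nz)]
    pz, py, px = patch
    sz, sy, sx = stride
    for z0 in range(0, nz, sz):
        for y0 in range(0, ny, sy):
            for x0 in range(0, nx, sx):
                sub = [[row[x0:x0 + px] for row in plane[y0:y0 + py]]
                       for plane in volume[z0:z0 + pz]]
                pred = segment_patch(sub)  # boolean mask, same shape as sub
                for dz, plane in enumerate(pred):
                    for dy, row in enumerate(plane):
                        for dx, v in enumerate(row):
                            if v:  # union of all patch predictions
                                out[z0 + dz][y0 + dy][x0 + dx] = True
    return out


def dice(a, b):
    """Dice = 2|A & B| / (|A| + |B|) for two same-shape boolean volumes."""
    inter = size_a = size_b = 0
    for pa, pb in zip(a, b):
        for ra, rb in zip(pa, pb):
            for va, vb in zip(ra, rb):
                inter += int(va and vb)
                size_a += int(va)
                size_b += int(vb)
    return 2.0 * inter / (size_a + size_b) if size_a + size_b else 1.0


def threshold_net(sub):
    """Toy stand-in for a trained network: marks air-like voxels."""
    return [[[v < -900.0 for v in row] for row in plane] for plane in sub]


vol = [[[(-950.0 if (y == 3 and x == 3) else 40.0) for x in range(8)]
        for y in range(8)] for z in range(8)]
pred = segment_in_patches(vol, patch=(4, 4, 4), stride=(2, 2, 2),
                          segment_patch=threshold_net)
score = dice(pred, threshold_net(vol))
```

With a voxel-wise toy model the patched result matches whole-volume inference exactly; a real U-Net uses spatial context, so patch borders and overlap handling matter much more in practice.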

1.3 Pulmonary nodule identification

Here we mention three recent works that suggest novel deep learning solutions for automatic identification and classification of pulmonary nodules – two crucial tasks for early diagnosis and treatment of lung cancer.

[1] combine two networks for pulmonary nodule detection in CT images. First, a Faster R-CNN [5] based on the VGG-Net architecture [6] detects pulmonary nodule candidates on axial CT slices; these candidates are then fed into a 3D CNN for false-positive reduction. This two-network method outperformed the best result of the LUNA16 challenge.
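The two-stage design, in which a detector proposes candidates and a 3D CNN re-scores them, can be sketched as a simple cascade. `classify_cube` is a hypothetical stand-in for the trained 3D CNN, and the cube half-width, zero padding, and 0.5 threshold are illustrative assumptions rather than the settings of [1].

```python
def crop_cube(volume, center, half, pad=0.0):
    """Extract a (2*half+1)^3 cube centred on (z, y, x), padding outside
    the volume with `pad`."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    cz, cy, cx = center
    return [[[volume[z][y][x] if (0 <= z < nz and 0 <= y < ny and 0 <= x < nx) else pad
              for x in range(cx - half, cx + half + 1)]
             for y in range(cy - half, cy + half + 1)]
            for z in range(cz - half, cz + half + 1)]


def reduce_false_positives(volume, candidates, classify_cube, half=2, threshold=0.5):
    """Second stage: re-score each first-stage candidate (z, y, x, score)
    with a 3D classifier on a cube around it; keep those above threshold."""
    kept = []
    for z, y, x, _det_score in candidates:
        prob = classify_cube(crop_cube(volume, (z, y, x), half))
        if prob >= threshold:
            kept.append((z, y, x, prob))
    return kept


def toy_classifier(cube):
    """Hypothetical stand-in for the 3D CNN: max intensity in the cube."""
    return max(v for plane in cube for row in plane for v in row)


vol = [[[0.0] * 7 for _ in range(7)] for _ in range(7)]
vol[3][3][3] = 1.0  # toy "nodule"
first_stage = [(3, 3, 3, 0.9), (0, 0, 0, 0.8)]  # second entry is a false positive
kept = reduce_false_positives(vol, first_stage, toy_classifier, half=2)
```

The cascade keeps the first stage tuned for high sensitivity and delegates specificity to a model that sees full 3D context around each candidate.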

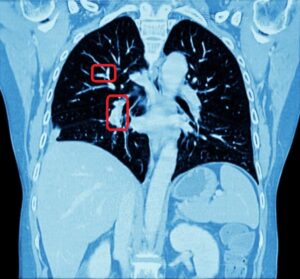

[2] address a different aspect of the same problem. They propose a weakly supervised method for training convolutional neural networks to identify pulmonary nodules in CT scans using only binary slice-level labels. Each CT slice is annotated as a “Nodule” or “NoNodule” image, and a CNN is trained on this binary classification task. Besides the predicted class probabilities, the network also outputs an activation intensity map showing which image regions most strongly indicate the presence of a nodule, which yields a coarse probability map of nodule locations. This map is then refined with a procedure in the spirit of an empirical counterpart of influence functions: for every nodule candidate, the image is passed through the network again with the candidate location masked, and the candidates whose masking has the largest influence on the network output are chosen as the actual nodule locations. Evaluated on the LIDC-IDRI dataset, the method achieved a Dice score similar to that of a U-Net trained with full voxel-level annotations (54–55% vs. 56% for the U-Net). This approach of deriving a probability map from a simple classification task is useful for other tasks as well, since it makes it possible to work with image-level (instead of pixel- or voxel-level) annotations on a wide variety of problems.
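The masking step can be illustrated as an occlusion test: mask one candidate region at a time, re-run the classifier, and rank candidates by how much the “Nodule” probability drops. `predict` is a hypothetical stand-in for the trained slice-level CNN, and the rectangular boxes, constant fill value, and top-k selection rule are assumptions for this sketch; [2] may mask and select differently.

```python
def masking_influence(image, candidates, predict, fill=0.0):
    """For each candidate box (y0, x0, y1, x1), half-open, return the drop
    in predicted 'Nodule' probability when that box is masked out."""
    base = predict(image)
    drops = []
    for y0, x0, y1, x1 in candidates:
        masked = [row[:] for row in image]  # copy, leave the input untouched
        for y in range(y0, y1):
            for x in range(x0, x1):
                masked[y][x] = fill
        drops.append(base - predict(masked))
    return drops


def select_nodules(image, candidates, predict, top_k=1):
    """Keep the top_k candidates whose masking changes the output most."""
    drops = masking_influence(image, candidates, predict)
    order = sorted(range(len(candidates)), key=lambda i: drops[i], reverse=True)
    return [candidates[i] for i in order[:top_k]]


img = [[0.0] * 8 for _ in range(8)]
for y in (2, 3):
    for x in (2, 3):
        img[y][x] = 1.0  # toy nodule
img[6][6] = 0.3  # faint structure that also lights up the coarse map


def toy_predict(image):
    """Hypothetical 'Nodule' probability driven only by bright pixels."""
    return min(1.0, sum(v for row in image for v in row if v > 0.5) / 4.0)


candidates = [(2, 2, 4, 4), (5, 5, 7, 7)]
drops = masking_influence(img, candidates, toy_predict)
nodules = select_nodules(img, candidates, toy_predict, top_k=1)
```

Masking the faint structure leaves the prediction unchanged, so it is discarded even though it appeared in the coarse map; this is the filtering effect the masking step is meant to provide.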

[8] use an ensemble of three ResNet-50 models, pre-trained on ImageNet, to classify benign versus malignant lung nodules in chest CT scans. The classification in their model is divided into five classes, ranging from benign to malignant. They show that combining pre-training with a weighted network-ensemble prediction achieves 93.4% accuracy on the LIDC-IDRI dataset, a 6% improvement over previous benchmarks.
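A weighted ensemble prediction boils down to a weighted average of each model's class probabilities followed by an argmax. The sketch assumes fixed, user-supplied weights (the values in the usage lines are hypothetical) and shows only the fusion step; how [8] obtain their weights is not covered here. The function accepts any number of classes, so it applies equally to a binary or a five-class setup.

```python
def weighted_ensemble(prob_lists, weights):
    """Weighted average of per-model class-probability vectors."""
    if len(prob_lists) != len(weights):
        raise ValueError("need one weight per model")
    total = sum(weights)
    fused = [0.0] * len(prob_lists[0])
    for probs, w in zip(prob_lists, weights):
        for c, p in enumerate(probs):
            fused[c] += w * p / total  # normalised so fused sums to 1
    return fused


def predict_class(fused):
    """Index of the most probable class."""
    return max(range(len(fused)), key=fused.__getitem__)


# Three models, two classes [benign, malignant]; weights are hypothetical
model_probs = [[0.6, 0.4], [0.3, 0.7], [0.2, 0.8]]
fused = weighted_ensemble(model_probs, weights=[1.0, 1.0, 2.0])
label = predict_class(fused)
```

Normalising by the weight sum keeps the fused output a valid probability distribution whenever each model's output is one.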

Deep Learning in Pulmonology – References

(*) Descoteaux, M., Maier-Hein, L., Franz, A., Jannin, P., Collins, D.L., Duchesne, S. (eds.) Medical Image Computing and Computer-Assisted Intervention MICCAI 2017. Springer International Publishing, Cham (2017)

[1] Ding, J., Li, A., Hu, Z., Wang, L.: Accurate pulmonary nodule detection in computed tomography images using deep convolutional neural networks. In: (*), pp. 559–567 (2017)

[2] Feng, X., Yang, J., Laine, A.F., Angelini, E.D.: Discriminative localization in CNNs for weakly-supervised segmentation of pulmonary nodules. In: (*), pp. 568–576 (2017)

[3] Meng, Q., Roth, H.R., Kitasaka, T., Oda, M., Ueno, J., Mori, K.: Tracking and segmentation of the airways in chest CT using a fully convolutional network. In: (*), pp. 198–207 (2017)

[4] Oliveira, F.P., Tavares, J.M.R.: Medical image registration: a review. Computer Methods in Biomechanics and Biomedical Engineering 17(2), 73–93 (2014)

[5] Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: Towards real-time object detection with region proposal networks. In: Advances in Neural Information Processing Systems, pp. 91–99 (2015)

[6] Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

[7] Sokooti, H., de Vos, B., Berendsen, F., Lelieveldt, B.P.F., Išgum, I., Staring, M.: Nonrigid image registration using multi-scale 3D convolutional neural networks. In: (*), pp. 232–239 (2017)

[8] Xie, Y., Xia, Y., Zhang, J., Feng, D.D., Fulham, M., Cai, W.: Transferable multi-model ensemble for benign-malignant lung nodule classification on chest CT. In: (*), pp. 656–664 (2017)

[9] Zitova, B., Flusser, J.: Image registration methods: a survey. Image and Vision Computing 21(11), 977–1000 (2003)